This is the first part of the Google SEO guide, and it covers Technical SEO. It is an essential step to perform before reaching the stage of Content Optimization and On-Page SEO described in part two of the guide.

So if you’re interested in optimizing your WordPress website for search engines yourself, this guide is intended for you. Whether you own a thriving business, administer a site, or work as an SEO expert, you’ll find in this series of guides all the necessary information for optimizing your site on Google.

Implementing the recommendations in this guide may not necessarily propel you to the top spot in Google search results, but I’m confident it will assist search engines in properly crawling your site’s content and understanding its structure and hierarchy optimally.

So let’s start by explaining what Technical SEO is…

What is Technical SEO?

Technical SEO is a highly important stage in any website’s SEO efforts. If your site has technical SEO-related issues, your optimization efforts, whether done with an SEO agency or not, might not yield the desired results or realize the maximum potential of those efforts.

The good news is that once you’ve conducted a Technical SEO audit and fixed the issues (or potential issues) it uncovers, you’ll rarely need to repeat these actions beyond periodic checks.

So, what is Technical SEO, you ask? – Technical SEO refers to the optimization process for your site in preparation for the crawling and indexing stage by Google (and other search engines). Through Technical SEO, you assist Google in accessing, crawling, parsing, and indexing your site without issues and in the most accurate manner.

The process is technical in nature as it doesn’t involve any aspects related to site content. The primary goal of Technical SEO is to optimize the site’s infrastructure.

To understand the complete picture, take a look at the diagram below depicting the three pillars of the SEO process: Technical SEO, On-Page SEO & Off-Page SEO:

On-Page SEO is the process related to content and how to make it relevant to what users are searching for. In contrast, Off-Page SEO (also known as link building) is the process of creating links from other sites to build authority (or Trust) in the site ranking process.

As you can see in the diagram, there are no clear boundaries between Technical SEO, On-Page SEO, and link building, as they work together to achieve full optimization of your site in terms of SEO.

Technical SEO | Recommended Actions

So, what can you do to improve technical SEO on your site? Here’s a list of actions to focus on, and it would be wise to perform these actions before the On-Page SEO and content optimization stage.

Prior to these actions, let’s present several relevant terms for this guide:

- Index – In the context of SEO, index is another term for the database of search engines. The index contains the information of all sites that Google managed to find. If a site isn’t in the index, users won’t be able to find it.

- Googlebot – The general name for Google’s crawler, sometimes referred to as Spider or Crawler. In other words, software developed by Google for scanning web pages.

- SEO – Search Engine Optimization – the process of improving the site for search engines and also the description of the role of the person responsible for performing this action.

- Link Equity – A ranking factor in search engines based on the idea that certain links pass value and “authority” from one page to another. Also called ranking power or sometimes “Link Juice.”

- SERP – A term describing the results in the search engine – Search Engine Results Page.

- Crawl Budget – The number of pages that Google is able and willing to crawl on your site within a given period (the budget is limited).

1. Maintain Proper Site Hierarchy

Every site has a homepage, which typically has the highest visit rates on the site and serves as the starting point for many visitors (except in cases where a site contains a small number of pages). Consider how you can improve and facilitate navigation for users moving from a general or category page to a more specific content page.

Furthermore, you should ask yourselves if there’s a sufficient number of pages related to a specific field or topic that justifies creating an archive page that contains and groups these pages. Do you have hundreds of different products that need to be categorized into multiple category and sub-category pages?

These actions will help maintain a proper hierarchical structure and should be considered during site planning for the sake of search engine crawling and visitor navigation on your site.

Hierarchy and Site Structure – Important Points

- The ideal site structure resembles a pyramid, with the homepage at the top and categories beneath it. For larger sites, use sub-categories to segment posts, products, and pages.

- Try to keep categories of similar sizes. If one category is significantly larger than the others (in terms of post and content quantity), consider dividing it into two.

2. Use a Properly Structured Navigation Menu

The most suitable navigation menu is the one that provides the best user experience. Navigation on the site (main menu) has an essential role and assists visitors in easily finding the desired content.

A properly structured menu can also help search engines understand which content you prioritize and wish to promote. Even though search results are displayed at the page level, Google also tries to understand each page’s role within the broader context of the site’s hierarchy, which ties back to the previous section.

Main Site Navigation Menu – Emphasized Points

- Prioritize user experience in navigation on the site, and only then optimize for SEO.

- Categorize content hierarchically with logic for the user.

- Add site categories to the navigation menu if relevant.

- Avoid using JavaScript for creating links.

- Navigation should be text-based, not images.

3. Use Breadcrumbs

Breadcrumbs (navigation path) are a type of navigation element that describes a specific hierarchy on websites and is usually found at the top of the page.

Breadcrumbs allow visitors to your site to quickly navigate back to the previous page or the homepage. Google and search engines also appreciate breadcrumbs, as they assist users on your site, helping search engines understand the site’s structure and hierarchy.

In breadcrumbs, the most general page (usually the base page) appears first as a link, followed by progressively more specific sections. On sites in Hebrew or any other language read from right to left, the most general page sits on the far right, with the more specific sections to its left; on left-to-right sites the order is mirrored.

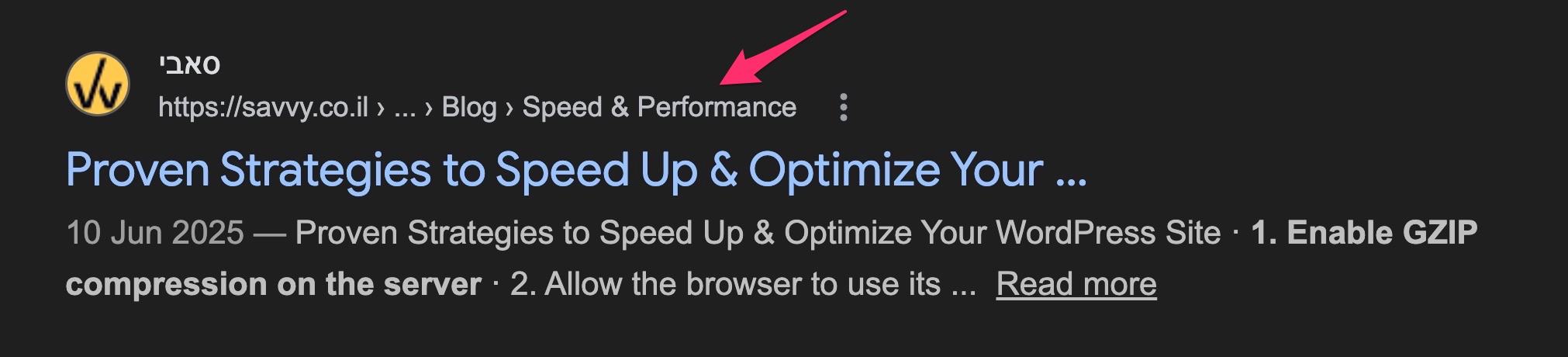

It’s recommended to use structured data and schema for breadcrumbs to indicate to Google that it’s a navigation path. This helps Google include it under the links to your site in search results, as shown in the following image:

Breadcrumbs – Key Points

- Breadcrumbs do not replace the main menu on the site.

- Breadcrumbs should appear at the top of the site, usually below the main menu and above the title.

- Always display all stages in the hierarchy, don’t skip a stage as it might confuse users.

If you have a WordPress site, take a look at the following guide that explains how to add breadcrumbs to these sites and delves deeper into the topic.
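As a rough illustration of the structured data mentioned above, here is a minimal Python sketch that builds schema.org BreadcrumbList markup as JSON-LD. The function names and the example trail are hypothetical, and on a WordPress site an SEO plugin would normally generate this markup for you.

```python
import json


def breadcrumb_jsonld(trail):
    """Build a schema.org BreadcrumbList from (name, url) pairs.

    `trail` lists the pages from the most general to the most specific.
    """
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": position, "name": name, "item": url}
            for position, (name, url) in enumerate(trail, start=1)
        ],
    }


def breadcrumb_script_tag(trail):
    """Wrap the JSON-LD in the script tag that goes into the page's HTML."""
    payload = json.dumps(breadcrumb_jsonld(trail), indent=2)
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical trail for a blog post (assumed URLs, not a real site).
trail = [
    ("Home", "https://example.com/"),
    ("Blog", "https://example.com/blog/"),
    ("Technical SEO Guide", "https://example.com/blog/technical-seo-guide/"),
]
print(breadcrumb_script_tag(trail))
```

Every stage of the hierarchy gets its own ListItem, which matches the recommendation not to skip stages in the visible breadcrumb either.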

4. Build Internal Links Correctly

Internal links play an integral role in any search engine optimization strategy. An internal link is one that points to the same domain it’s located on. In simple words, it’s a link that points to another page within the same site.

Useful internal links help create an “architecture” for a specific site, allowing the distribution of link equity between internal pages under the same domain. Moreover, they enable users to navigate the site, discover new content, and help establish a kind of information hierarchy on your site.

An optimal site structure resembles a pyramid, similar to the hierarchical structure of the site mentioned earlier. Consider the main point at the top, which is the home page:

In this structure, there’s a minimal number of links between the home page and each other page on the site, allowing link equity to flow to all pages. This increases the ranking potential of each page on Google.
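One way to check how close your site is to this pyramid is to measure the minimum number of clicks from the home page to every other page. The sketch below does this with a breadth-first search over a hypothetical link map (in practice the map would come from a crawl of your own site); pages the search never reaches have no internal path from the home page.

```python
from collections import deque


def click_depths(links, home):
    """Return the minimum number of clicks from `home` to each reachable page.

    `links` maps a page to the list of pages it links to.
    """
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link map (assumed paths, for illustration only).
site = {
    "/": ["/blog/", "/shop/"],
    "/blog/": ["/blog/technical-seo-guide/"],
    "/shop/": ["/shop/widgets/"],
    "/shop/widgets/": ["/shop/widgets/blue-widget/"],
    "/orphan/": ["/"],  # links out, but nothing links to it
}

depths = click_depths(site, "/")
orphans = set(site) - set(depths)
print(depths)
print("Unreachable from the home page:", orphans)
```

Pages with a large depth, or pages that turn up as unreachable, are natural candidates for additional internal links.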

Internal Links – Important Points

- Create quality content. A good internal link strategy also requires a good marketing strategy; one cannot exist without the other.

- Write natural anchor text. Avoid excessive optimization, but try to include relevant keywords.

- In general, avoid linking to pages in the upper hierarchy of the site. These pages should appear as links in the main navigation menu.

- Use Follow links for internal links.

- Use a reasonable number of internal links; there’s no need to overdo it.

- Opinions vary, but try to avoid linking to contact pages at the end of each post (unlike what’s done on this blog).

I’ve written a comprehensive guide discussing internal links and proper strategies. Take a look at the post on Internal Links and Their Importance for SEO.

5. Optimize for Core Web Vitals

Core Web Vitals are a set of metrics that Google uses to evaluate the real-world user experience of your pages. Since 2021, these metrics have been part of Google’s page experience ranking signals, and as of March 2024, the three current Core Web Vitals are:

- Largest Contentful Paint (LCP) – Measures loading performance. A good LCP is 2.5 seconds or less.

- Interaction to Next Paint (INP) – Measures responsiveness to user interactions throughout the page lifecycle. A good INP is 200 milliseconds or less. INP replaced First Input Delay (FID) as a Core Web Vital in March 2024.

- Cumulative Layout Shift (CLS) – Measures visual stability. A good CLS score is 0.1 or less.

Unlike many other ranking factors, Core Web Vitals are assessed from real user data (the Chrome User Experience Report). You can check your field scores using PageSpeed Insights or Google Search Console, while Chrome Lighthouse provides lab measurements that are useful for debugging.

Core Web Vitals act as a tie-breaker between pages of similar quality and relevance. They won’t override strong content and backlinks, but they can give you an edge over competitors with similar content who have poorer performance scores.
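To make the thresholds above concrete, here is a small Python sketch that flags which metrics miss the "good" range. The measurement values are made up for illustration; real values would come from a tool such as PageSpeed Insights.

```python
# "Good" thresholds from the list above: LCP in seconds, INP in milliseconds,
# CLS as a unitless score.
GOOD_THRESHOLDS = {"LCP": 2.5, "INP": 200, "CLS": 0.1}


def is_good(metric, value):
    """A metric is good when it is at or below its threshold."""
    return value <= GOOD_THRESHOLDS[metric]


def failing_metrics(measurements):
    """Return the Core Web Vitals that miss the "good" threshold."""
    return [
        metric
        for metric in GOOD_THRESHOLDS
        if metric in measurements and not is_good(metric, measurements[metric])
    ]


# Hypothetical measurements for one page.
page = {"LCP": 3.1, "INP": 180, "CLS": 0.1}
print(failing_metrics(page))
```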

6. Don’t Hesitate to Use Outbound Links

Outbound links are one of the ways used by search engines to discover new content. Outbound links are important as they can support the site’s ranking in search engines, build trust with your audience, and even create relationships with other businesses.

Outbound links are an important aspect of SEO as they provide additional value to readers, ultimately enhancing the user experience on your site. We won’t go into detail about outbound links, as I’ve already written an extensive post on the topic (click the link above).

7. Use Short and User-Friendly URLs

Creating categories and filenames that describe the page’s content or category helps organize the site more effectively.

Friendly URLs are easier to understand for those who want to add links to your content. Visitors might be discouraged by especially long website addresses or those that are not intuitive.

For example, compare these two URLs pointing to the same page:

✗ https://example.com/index.php?cat=7&id=120&ref=nav

✓ https://example.com/blog/technical-seo-guide/

The first URL is cryptic and meaningless to both users and search engines. The second is short, descriptive, and includes relevant keywords, making it much more readable and clear.

Some users who link to a page on your site might use the page’s URL as the linked text. Therefore, a website address containing relevant keywords will provide users with additional information about the expected content on that page.
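As an illustration of how a short, keyword-bearing URL can be derived from a page title, here is a minimal slug function in Python. It is a sketch under simplifying assumptions: it keeps only ASCII letters and digits, so titles in non-Latin scripts such as Hebrew would need a different approach, and WordPress has its own permalink logic.

```python
import re
import unicodedata


def slugify(title):
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    # Decompose accented letters, then drop anything that is not ASCII.
    ascii_text = (
        unicodedata.normalize("NFKD", title)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    # Collapse every run of other characters into a single hyphen.
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


# Hypothetical title and base URL.
slug = slugify("Technical SEO Guide: Part 1!")
print(f"https://example.com/blog/{slug}/")
```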

WordPress users (and in general) are invited to check out the post Choosing the Best Permalinks Structure for SEO for more information on the topic.

8. Create a Sitemap (XML Sitemap)

A sitemap, or “XML Sitemap”, is a file containing a list of all the pages on your site that you want search engines to crawl and index. It’s worth noting that Google will crawl your site even without a Sitemap. However, creating this file makes it easier for Google to find the content on your site, new content in particular.

An XML Sitemap helps search engines discover new and updated pages on your site, and it lists all the site addresses relevant for crawling along with the last modification dates of these pages. You can upload the Sitemap file to your server and submit its address through Google Search Console.

Creating an XML Sitemap – Important Considerations

- Use tools and plugins that generate the Sitemap automatically.

- Submit the Sitemap to Google using the Search Console.

- Add only the canonical version of the address to the Sitemap.

- Do not add a Noindex-tagged address to the site map.

- For sites with over 50,000 URLs, use a Sitemap Index file that references multiple individual sitemaps, each under the 50,000 URL limit. Segment sitemaps by content type (e.g., posts, products, categories) for better monitoring in Search Console.
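For readers curious about what such tools produce, the following Python sketch builds a sitemap, splits a URL list into chunks of at most 50,000 entries, and builds a Sitemap Index that references the resulting files. The URLs, dates, and file names are hypothetical, and a plugin remains the more practical route for most sites.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
XML_DECLARATION = '<?xml version="1.0" encoding="UTF-8"?>\n'
MAX_URLS = 50_000  # per-file URL limit mentioned above


def build_sitemap(entries):
    """Build a sitemap from (loc, lastmod) pairs; lastmod is a YYYY-MM-DD string."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return XML_DECLARATION + ET.tostring(urlset, encoding="unicode")


def chunk(entries, size=MAX_URLS):
    """Split the entries into lists of at most `size` items."""
    entries = list(entries)
    return [entries[i:i + size] for i in range(0, len(entries), size)]


def build_sitemap_index(sitemap_urls):
    """Build a Sitemap Index that points at the individual sitemap files."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for loc in sitemap_urls:
        ET.SubElement(ET.SubElement(index, "sitemap"), "loc").text = loc
    return XML_DECLARATION + ET.tostring(index, encoding="unicode")


# Hypothetical canonical URLs with their last modification dates.
pages = [
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/technical-seo-guide/", "2024-03-02"),
]
print(build_sitemap(pages))
print(build_sitemap_index(["https://example.com/sitemap-posts.xml"]))
```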

9. Use 30X Redirects

To prevent a situation where certain users link (incoming links) to different versions of a page’s URL on your site, causing a split in ranking power, use permanent 301 redirects.

If your site contains identical content accessible through different URLs, you should implement a 301 redirect to the “strong” URL that you’ve decided holds dominance, particularly the one ranked higher in Google’s search results.

30X Redirects – Key Points

- All types of redirects carry a certain level of SEO risk.

- 301 redirects preserve full link equity when properly implemented with relevant 1:1 URL mapping. The destination page should contain equivalent or improved content.

- The best redirect is when the page remains exactly the same except for the URL.

- Avoid excessive chaining of redirects, meaning one address pointing to another, which points to yet another address. Each additional hop increases latency and consumes crawl resources.

- Keep redirects live for at least 12 months to allow Google to fully migrate link equity and for external sites to update their links.

- Redirects for SEO purposes include, among other things:

- Removing Query Strings from the URL.

- Improving folder structure and URL.

- Changing the URL and adding keywords.

- Making the URL more readable for humans.
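The advice about redirect chains can be checked mechanically. The sketch below takes a hypothetical redirect map (old address to new address), follows each entry to its final destination, counts the hops, and produces a flattened map in which every old address points straight at the final one. The paths are invented for illustration.

```python
def resolve(redirects, url, max_hops=10):
    """Follow `url` through the redirect map and return (final_url, hops).

    Raises ValueError if the redirects loop or the chain exceeds `max_hops`.
    """
    seen = [url]
    while url in redirects:
        url = redirects[url]
        if url in seen or len(seen) > max_hops:
            raise ValueError(f"redirect loop or overly long chain: {seen + [url]}")
        seen.append(url)
    return url, len(seen) - 1


def flatten(redirects):
    """Point every source directly at its final destination (a single hop)."""
    return {source: resolve(redirects, source)[0] for source in redirects}


# Hypothetical redirect map containing a chain of three hops.
redirects = {
    "/index.php?id=120": "/older-page/",
    "/older-page/": "/old-page/",
    "/old-page/": "/new-page/",
}

print(resolve(redirects, "/index.php?id=120"))
print(flatten(redirects))
```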

Here’s a guide explaining how to implement redirects in WordPress (with an emphasis on regular expressions) using the Redirection plugin.

If, for some reason, it’s not feasible for you to perform permanent redirects or any kind of 30X redirect, you can also use canonical URLs to indicate the dominant page among different URLs containing the same content.

10. Use Canonical URLs

A canonical URL is a way of telling search engines that a specific URL represents the master page of specific content. Using canonical URLs prevents issues arising from duplicate content appearing under multiple addresses. Practically speaking, a canonical tag tells search engines which version of the page you want to appear in search results.

Here’s how a canonical tag is written in the <head> of a specific page:

<link rel="canonical" href="https://example.co.il/master-version/" />

Solving duplicate content issues can be a bit tricky, but here are some important points to consider when using canonical tags.

Canonical Tags – Key Points

- A canonical URL can point to itself. In other words, there’s no restriction against having a canonical tag in page X that points to the URL of page X.

- Since duplicate home pages are common and can be linked to in multiple ways beyond your control, it’s generally a good idea to add a canonical tag on the home page pointing to itself.
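To audit canonical tags on your own pages, you can extract them from the HTML. The following Python sketch uses the standard library’s HTML parser to collect every canonical link on a page and to check whether the page points to itself. The class and function names are hypothetical, and the HTML is passed in as a string so the example runs without a network connection.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag in a document."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values and attributes.get("href"):
            self.canonicals.append(attributes["href"])


def find_canonicals(html):
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals


def is_self_canonical(html, page_url):
    """True when the page declares exactly one canonical URL: its own."""
    return find_canonicals(html) == [page_url]


page_html = """
<html><head>
<link rel="canonical" href="https://example.co.il/master-version/" />
</head><body></body></html>
"""
print(find_canonicals(page_html))
print(is_self_canonical(page_html, "https://example.co.il/master-version/"))
```

A page that yields more than one canonical URL deserves a closer look, since conflicting tags leave the choice of the master version unclear.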

11. Use SSL Certificates and HTTPS Protocol

HTTPS is no longer optional – it’s the baseline standard for any website. Google confirmed HTTPS as a ranking signal back in 2014, and since then, adoption has become nearly universal. All major browsers now display a “Not Secure” warning for sites that still use plain HTTP.

Here are the key advantages that SSL certificates and HTTPS provide:

- SSL certificates provide a simple way to protect customer information on your site.

- Google takes HTTPS into account as a ranking signal.

- The padlock icon in the browser’s address bar shows users that they can trust your site with their data.

- HTTPS also allows you to utilize the HTTP/2 protocol (and HTTP/3), which significantly improves loading times through multiplexed connections and header compression.

If your site is still on HTTP, migrating to HTTPS should be your top priority. Beyond the SEO benefit, browsers like Chrome and Firefox actively warn visitors when a site lacks HTTPS, which can increase bounce rates and reduce trust.

12. Improve Site Performance and Loading Time

I discuss improving site performance and loading times extensively in this blog, both in general and specifically for WordPress sites. I won’t go into detail on this topic in the current post, but improving site speed leads to a better browsing experience, which makes it a significant aspect of technical SEO.

This is also why Google attributes importance to loading times when ranking your site. Since 2021, Core Web Vitals (currently LCP, INP, and CLS) have been the specific metrics Google uses to measure page experience. Furthermore, a faster site also has a positive impact on the crawl budget Google assigns to it.

Performance Testing Tools

The best free tools for testing your site’s loading time and performance are:

- PageSpeed Insights – Google’s tool that combines lab data (Lighthouse) with real-world field data from the Chrome User Experience Report.

- Lighthouse – Available in Chrome DevTools (the Lighthouse panel, formerly called Audits), as a CLI tool, or through PageSpeed Insights. Provides detailed performance, accessibility, and SEO audits.

- WebPageTest – An advanced, open-source tool for detailed waterfall analysis, multi-step testing, and connection throttling.

13. Optimize Category Pages

Category pages are relatively “weaker” in terms of SEO as they often don’t contain a lot of content. Typically, they include summaries of posts (if it’s a blog) and images and links to the posts themselves.

Add some content at the beginning of the page that describes to users what content they can find in this category. Additionally, it’s a good idea to provide users with a few links at the top of the page (1-3, for instance) that point to strong and relevant posts within the category. These are posts that most likely interest the majority of visitors who land on this category.

Optimizing category pages falls into the gray area between On-Page SEO and Technical SEO. Here’s a comprehensive article I wrote that discusses improving category pages in WordPress for better SEO and higher rankings in Google and other search engines.

14. Add Schema and Structured Data

Structured data (Schema) is code that you can add to your site’s pages to describe the content to search engines better and allow them to understand it more effectively.

Search engines can use this data to present the content in useful ways in search results that attract the attention of users. By using Schema and structured data, you can help search engines analyze the page’s content and determine the relevant customers for your business or product that you’re marketing.

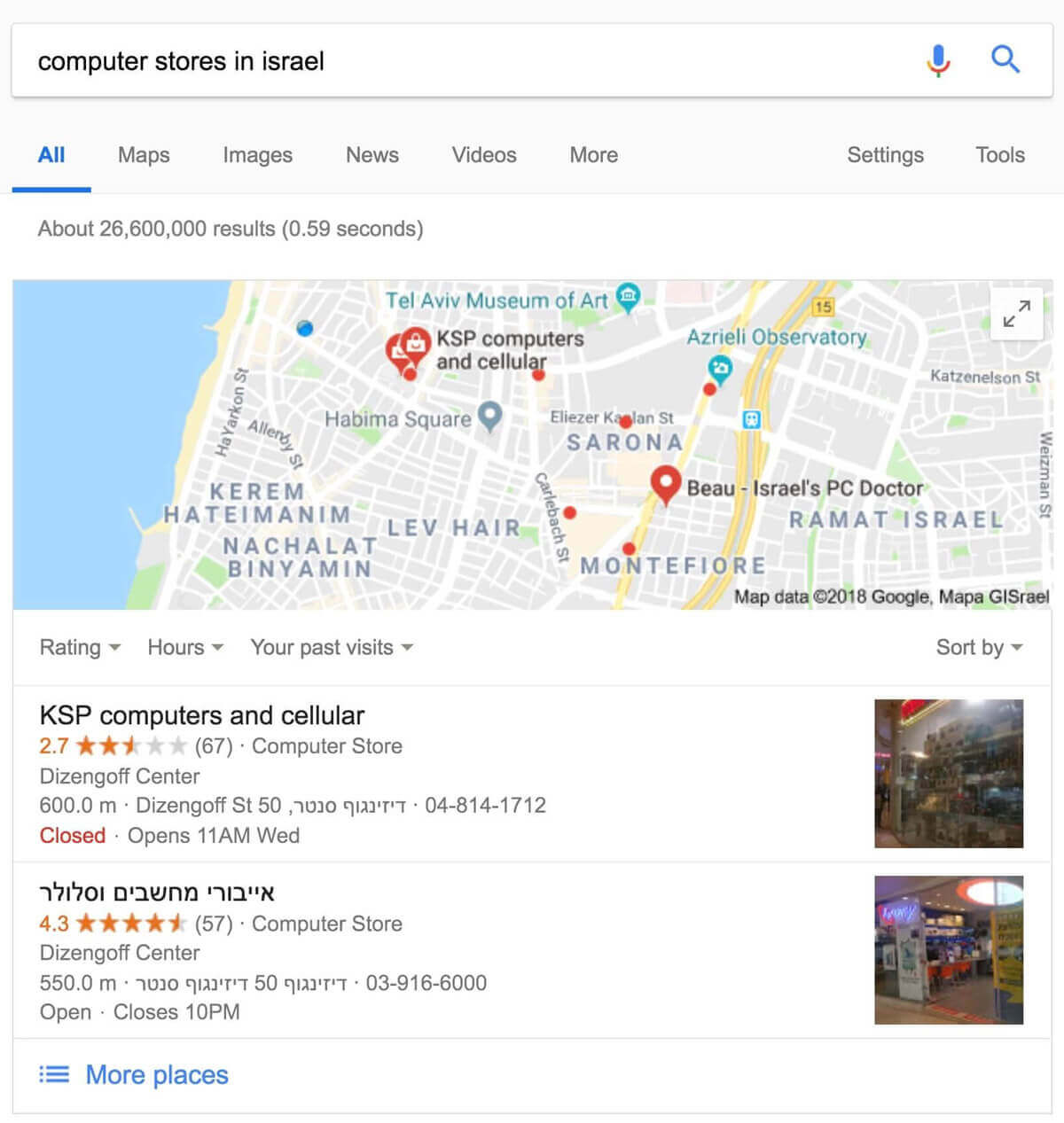

For example, if you have an online store and you add structured data to a single product page, it helps search engines understand that the page displays that particular product along with its price and customer reviews. Google might display this information in the text snippet within search results for relevant queries.

Search results with structured data are also known as “rich results.”

In addition to enhancing advanced search results, Google might use structured data for presenting relevant results in other formats.

For instance, if you have a local business, marking your opening hours with structured data allows potential customers to find you precisely when they need to and know whether your store is open or closed during their search.

Properly embedded structured data allows you to apply various special features to your page’s search results, including star ratings, carousels, features, and unique designs.

You can add or mark structured data using code or with the help of tools available in Google Search Console.

Once you’re done, use Google’s Rich Results Test to verify that your structured data is implemented correctly and eligible for rich results. For general Schema.org validation beyond Google-specific features, you can also use the Schema Markup Validator at validator.schema.org.

Schema and Structured Data – Highlights

- Avoid using invalid structured data. Don’t modify the source code of the site if you’re unsure how to implement structured data properly.

- Avoid creating fake reviews or adding irrelevant structured data to your site’s content.

- Google recommends using JSON-LD for structured data.
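As a sketch of what JSON-LD for the online store example above might look like, the following Python snippet builds schema.org Product markup with a price and an aggregate rating. The product data and the function name are hypothetical; the resulting JSON would be placed inside a script tag of type application/ld+json on the product page and then checked with the Rich Results Test.

```python
import json


def product_jsonld(name, price, currency, rating_value, review_count):
    """Describe a single product page as a schema.org Product."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "reviewCount": review_count,
        },
    }


# Hypothetical product data; use only genuine prices and reviews on a real site.
markup = product_jsonld("Blue Widget", 19.99, "USD", 4.6, 87)
print(json.dumps(markup, indent=2))
```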

Furthermore, you’re welcome to read a more extensive guide I wrote about the topic, explaining Schema and Structured Data and how to add them to WordPress sites.

15. Optimize the robots.txt File

There are many situations where you don’t want certain pages, directories, or files to be crawled because they wouldn’t be useful to users if they appear in Google’s search results.

You can ask Google and search engines not to crawl specific files and directories using a file called robots.txt (post about this file in the link). This file should be located in the root folder of your site, and be sure not to block pages or files that you want to be indexed. Here’s an example of a robots.txt file:

User-agent: *

Disallow: /admin/

Disallow: /private/

Disallow: /directory/some-pdf.pdf

It’s worth noting that the noindex tag, on the other hand, indicates to search engines not to add specific URLs to their index. You can add this tag to the <head> element of the page, like this:

<meta name="robots" content="noindex">

Robots.txt – Key Points

- Robots.txt is only a request not to crawl specific pages. If you block certain pages but other pages on the internet link to them, they may still appear in search results.

- The NoIndex tag, on the other hand, completely prevents pages from being displayed in search results, provided search engines are allowed to crawl the page and see the tag.

- Using Robots.txt, you can block entire directories, while NoIndex allows blocking individual pages only.

- If you don’t want to grant access to a specific page entirely, you should use other security methods like password protection or server-level blocking (for sensitive content, etc.).

It’s worth noting that if your site is on a subdomain and you want specific pages not to be crawled in this subdomain, you need to create a separate robots.txt file for it.
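Before publishing a robots.txt file, it can help to test which addresses it blocks. The sketch below feeds the example rules from this section into Python’s standard urllib.robotparser module and lists the blocked URLs. The URLs are hypothetical, and note that this parser follows the basic robots.txt rules only, so its results may differ from Googlebot’s for patterns that rely on wildcards.

```python
from urllib.robotparser import RobotFileParser

# The example rules shown earlier in this section.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /private/
Disallow: /directory/some-pdf.pdf
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given rules ask `user_agent` not to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical URLs to check, including assets that should stay crawlable.
urls = [
    "https://example.com/admin/settings",
    "https://example.com/blog/technical-seo-guide/",
    "https://example.com/assets/style.css",
    "https://example.com/directory/some-pdf.pdf",
]
print(blocked_urls(ROBOTS_TXT, urls))
```

The same check is a quick way to confirm that the rules do not accidentally block pages or files you want indexed.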

If you’re working with WordPress, there are plugins that allow you to easily specify which content you don’t want to appear in search results. If this is the case, take a look at the guide on setting up the Yoast SEO plugin for WordPress on this blog.

16. Present a Useful 404 Page

There are situations where users arrive at a non-existent page (404 error page) by clicking on a broken link or by entering a wrong URL. The 404 page should guide users back to an active page in a user-friendly manner.

The 404 page can include a link back to the homepage and can also have links to popular or related content on the site, thus improving the user experience. You can use Google Search Console to find the source of site addresses causing 404 errors.

404 Pages – Important Points

- Avoid adding 404 pages to search engine indexes. Ensure the server returns a 404 status code for these pages. For JavaScript-based sites, add a noindex meta tag when there’s a request for non-existent pages.

- Despite the previous point, search engines should be allowed to crawl and access 404 pages, and they shouldn’t be blocked using the robots.txt file.

- Avoid displaying unclear messages on 404 pages.

- Design 404 pages to match your site’s overall design.
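To show how the status code and the helpful content fit together, here is a minimal Python WSGI application that returns a real 404 status alongside a friendly page linking back to the homepage. It is only a sketch: the page contents and paths are hypothetical, and on WordPress the theme’s 404 template plays this role.

```python
# Hypothetical pages that exist on the site.
KNOWN_PAGES = {
    "/": "<h1>Home</h1>",
    "/blog/": "<h1>Blog</h1>",
}

NOT_FOUND_HTML = """<!doctype html>
<title>Page not found</title>
<h1>Sorry, we couldn't find that page</h1>
<p><a href="/">Back to the homepage</a> or browse the <a href="/blog/">blog</a>.</p>
"""


def app(environ, start_response):
    """Serve known pages with 200 and everything else with a real 404 status."""
    path = environ.get("PATH_INFO", "/")
    if path in KNOWN_PAGES:
        status, body = "200 OK", KNOWN_PAGES[path]
    else:
        # The friendly page is for users; the status code is for search engines.
        status, body = "404 Not Found", NOT_FOUND_HTML
    payload = body.encode("utf-8")
    headers = [
        ("Content-Type", "text/html; charset=utf-8"),
        ("Content-Length", str(len(payload))),
    ]
    start_response(status, headers)
    return [payload]
```

To try it locally, the app can be served with the standard library, for example by passing it to make_server from wsgiref.simple_server. The point of the design is that the friendly page never comes with a 200 status, which would otherwise risk the error page being indexed.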

17. Allow Google to View the Page Like a User

When Google scans a specific page, it should see the page exactly as an average user does. To optimize Google’s indexing, allow the Googlebot to access all assets on the site, including JavaScript, CSS, and image files.

If the robots.txt file mentioned earlier prevents the scanning of these resources, it directly affects how algorithms process and add content to the index, potentially leading to suboptimal rankings.

You can verify how Googlebot renders your pages using the URL Inspection tool in Google Search Console. It shows the rendered HTML and a screenshot of how Google sees the page. Additionally, Chrome Lighthouse provides an SEO audit that flags issues like blocked resources or missing meta tags.

18. Adapt the Site for Mobile Devices

Building a mobile-friendly site is critical for your online presence, better rankings, and SEO. Google completed its transition to mobile-first indexing in 2024, meaning it now crawls and indexes sites using the mobile version of their content. Sites whose content is not accessible on mobile devices at all may not be indexed.

Since over 60% of web traffic now comes from mobile devices, ensuring your site delivers a great mobile experience is no longer optional.

There are three options for creating a mobile-friendly site:

- Responsive design that adapts to all devices (the preferred option).

- Building a separate mobile site with a different URL.

- Dynamic serving – the server provides different HTML and CSS files depending on the device’s needs.

Regardless of your chosen configuration for a mobile-friendly site, there are key points you should consider:

Mobile-Friendly Site – Highlights

- Avoid using annoying pop-ups that cover the screen on mobile devices and hinder user experience. Google’s page experience signals penalize intrusive interstitials.

- Mobile visitors expect the same functionality as the desktop version, such as the ability to interact, make payments, navigate easily, etc.

- Ensure your mobile pages contain the same content as the desktop version. With mobile-first indexing, content that exists only on the desktop version will not be indexed.

- Test your mobile experience using Chrome Lighthouse or PageSpeed Insights, which include mobile-specific performance and usability checks.

FAQs

Common questions about Technical SEO:

What is the difference between robots.txt and a noindex tag?

A robots.txt file tells search engine crawlers not to crawl specific directories or files, but it doesn't prevent pages from appearing in search results if they're linked from other sites. The noindex meta tag, on the other hand, instructs search engines not to add a page to their index at all, ensuring it won't appear in search results regardless of incoming links.

In Conclusion

Technical SEO involves various tests and configurations that need to be optimized to help search engines crawl and index your site smoothly. In most cases, after you’ve conducted proper technical SEO, you won’t need to deal with these tasks again beyond periodic checks and fixing new issues if they arise.

The term technical suggests that some technical background and knowledge are required to perform some of the actions outlined in this guide (such as improving site speed, adding structured data, etc.), but these are necessary to realize your site’s full potential and achieve higher rankings on Google.

To truly maximize your SEO efforts, it’s also important to understand Google’s E-E-A-T principles – Experience, Expertise, Authoritativeness, and Trustworthiness. These factors influence how Google evaluates the quality and credibility of your content.

If you’ve completed the technical SEO process, it’s time to move on to part two of the guide and perform content optimization for your site – the process known as On-Page SEO.