The HTTP protocol has been around since about 1991 and received a major upgrade in 1999 with the HTTP/1.1 specification (RFC 2616).

Since then, many articles and guides have been published explaining how to optimize websites and work around HTTP/1.1's performance limitations, and it has quite a few of them.

Tools such as GTmetrix and WebPageTest (which I covered in a separate guide) are among the most authoritative ways to test how fast a website loads, and in most cases they are excellent. However, some of their recommendations may no longer be relevant in the era of HTTP/2.

So, what is HTTP/2, and what’s new in this protocol?

HTTP/2 (originally named HTTP/2.0) is a network protocol and the successor to HTTP/1.1. According to W3Techs, about 42.4% of the top 10 million websites used it as of December 2019.

Let’s explore the innovations in the protocol and see how they manifest in terms of performance improvement across websites:

A. Multiplexing

Multiplexing is arguably the most significant feature of HTTP/2. It solves one of HTTP/1.1's central problems: head-of-line blocking at the HTTP level.

In brief, a single HTTP/1.1 connection (TCP connection) can carry only one outstanding request at a time. Any other request on that connection must wait until the previous response has finished, and this causes considerable delays.

To work around this, browsers open several connections to the same host so they can download multiple assets in parallel.

However, browsers limit how many connections they open to a single host at the same time. The limit is typically between 2 and 8, depending on the browser, and it exists to avoid overloading the network and the server.
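The following toy model in Python makes the connection limit concrete by showing when each asset can start downloading over HTTP/1.1. It assumes every download takes the same time and that each connection carries one request at a time. Bandwidth, handshakes and pipelining are ignored, and the function name is hypothetical.

```python
from typing import List


def http1_start_times(num_assets: int, connections: int, duration_ms: int) -> List[int]:
    """Start time (ms) of each asset over `connections` parallel HTTP/1.1 connections.

    Toy model: each connection carries one request at a time and every download
    takes exactly `duration_ms`, so assets are fetched in batches of `connections`.
    """
    if connections < 1:
        raise ValueError("connections must be at least 1")
    # Asset i waits for the (i // connections) batches queued ahead of it.
    return [(i // connections) * duration_ms for i in range(num_assets)]


if __name__ == "__main__":
    # Hypothetical page: 8 assets, a 6-connection limit, 100 ms per download.
    print(http1_start_times(8, 6, 100))  # [0, 0, 0, 0, 0, 0, 100, 100]
```

With 8 assets and 6 connections, the last two assets cannot start until the first batch has finished.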

Multiplexing solves these problems by letting many requests and responses be in flight at the same time over a single TCP connection. As a result, the browser can request an asset as soon as it discovers it in the page, without waiting for a TCP connection to become free.

Latency is also lower. The handshake needed to open a new TCP connection (and, over HTTPS, the TLS handshake) happens only once per host.
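This sketch shows the idea behind multiplexing. Each response is split into small frames tagged with a stream ID. Frames from different streams are interleaved on one connection, and the receiver reassembles them by ID. It is a simplified Python model, not the real HTTP/2 frame format: it ignores headers, flow control and prioritization, and the round-robin order and frame size are assumptions. The odd stream IDs in the example match HTTP/2, where client-initiated streams use odd numbers.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple

Frame = Tuple[int, bytes]  # (stream ID, chunk of the response body)


def multiplex(responses: Dict[int, bytes], frame_size: int = 4) -> List[Frame]:
    """Split each response into frames and interleave them round-robin on one connection."""
    queues: Dict[int, Deque[bytes]] = {
        sid: deque(body[i:i + frame_size] for i in range(0, len(body), frame_size))
        for sid, body in responses.items()
    }
    wire: List[Frame] = []
    while any(queues.values()):
        for sid, queue in queues.items():
            if queue:
                # One frame per stream per round, so no stream blocks the others.
                wire.append((sid, queue.popleft()))
    return wire


def demultiplex(wire: List[Frame]) -> Dict[int, bytes]:
    """Reassemble each response on the receiving side by its stream ID."""
    streams: Dict[int, bytearray] = {}
    for sid, chunk in wire:
        streams.setdefault(sid, bytearray()).extend(chunk)
    return {sid: bytes(buf) for sid, buf in streams.items()}


if __name__ == "__main__":
    # Odd stream IDs, as used for client-initiated HTTP/2 streams.
    responses = {1: b"<html>..</html>", 3: b"body{}", 5: b"GIF89a"}
    wire = multiplex(responses)
    print([sid for sid, _ in wire])
    assert demultiplex(wire) == responses
```

Because frames of the three responses alternate on the wire, a long HTML response does not hold back the small CSS and image responses behind it.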

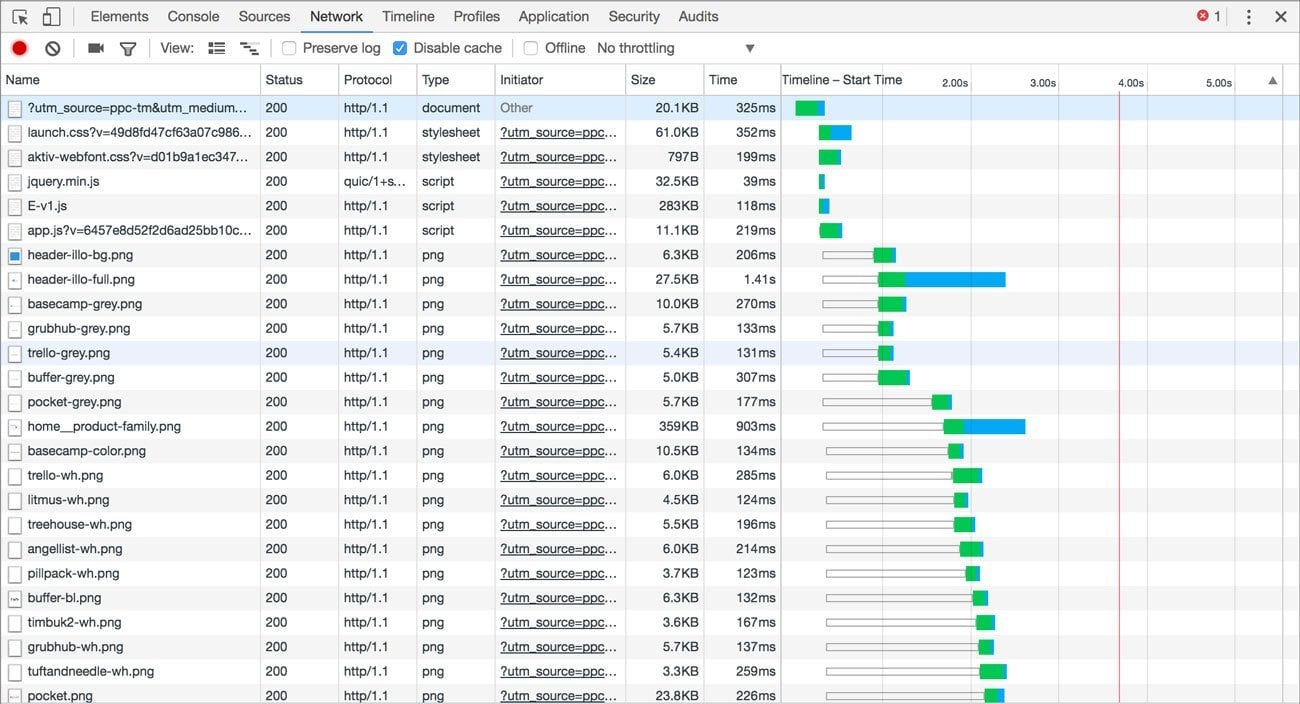

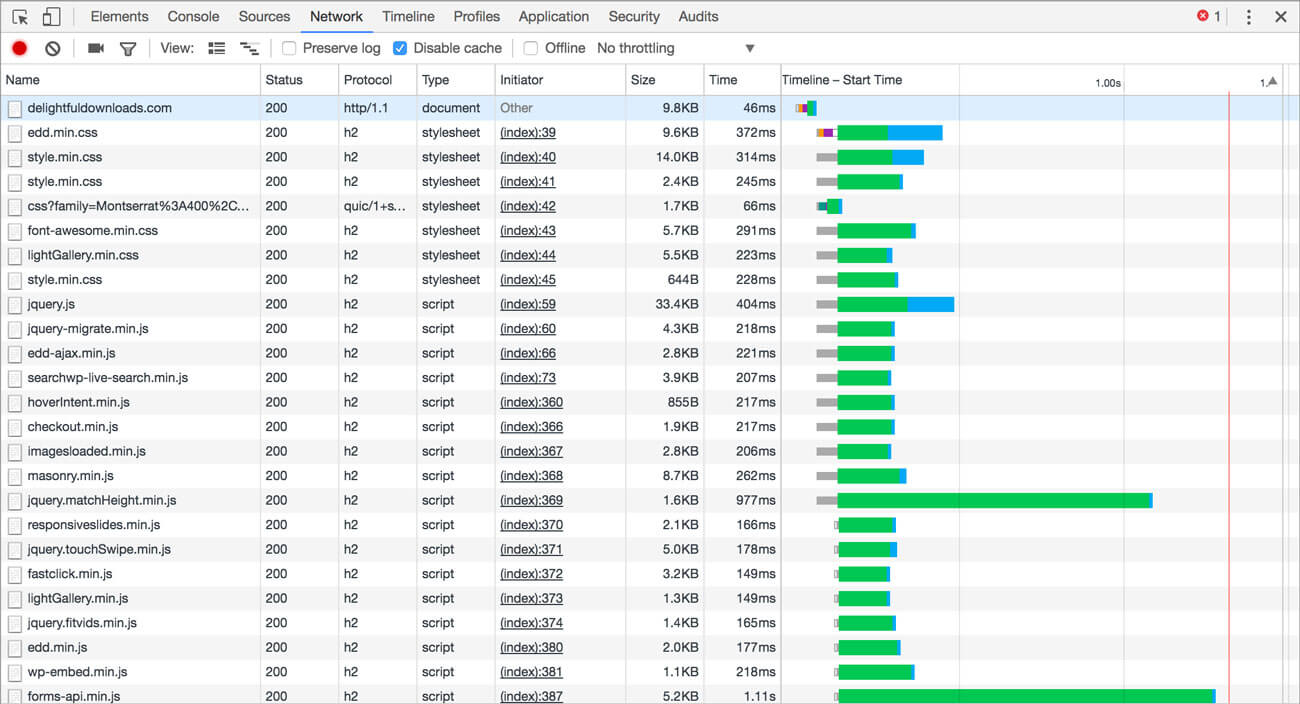

The impact of multiplexing can be seen in the waterfall diagrams below. HTTP/1.1 starts downloading assets only when it has a free TCP connection:

In contrast, HTTP/2 downloads these assets in parallel:

B. HPACK Header Compression

Every HTTP request and response includes headers, which let the browser and server pass additional information to each other. A typical response for an image on https://savvy.co.il returns the following headers:

accept-ranges:bytes

cache-control:max-age=31536000, public

content-length:37534

content-type:image/jpeg

date:Sat, 21 Oct 2017 09:12:12 GMT

expires:Sun, 21 Oct 2018 09:12:12 GMT

last-modified:Sat, 30 Sep 2017 15:42:50 GMT

pragma:public

server:Apache

status:200

x-xss-protection:1; mode=block

Headers can also carry information such as cookies or the referrer, which makes them even larger. With HTTP/2, when a second request's headers differ from the first in only a few fields, only the difference needs to be sent:

:path: /logo.png

referer: https://savvy.co.il/lp/index.html

HTTP/1.1 works differently. It sends the complete headers again with every request, even though most requests for a site's assets carry nearly identical headers, and this is inefficient.

HPACK, HTTP/2's header compression scheme, has both sides keep an index (a table) of the headers already sent on the connection. In later requests, a header that is already in the table is sent as a short index reference, and only new or changed headers are sent in full. This saves a lot of redundant traffic, especially on sites with many assets.
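Below is a toy Python model of this indexing idea. It is not the real HPACK encoding. Actual HPACK also has a predefined static table of 61 common headers, evicts old dynamic-table entries when the table fills up, and can Huffman-encode literal strings. The class name and the tuple format are hypothetical. The key point is that the encoder and the decoder each keep their own copy of the table and update it in the same order, so an index is enough for the receiver to recover the header.

```python
from typing import List, Tuple

Header = Tuple[str, str]


class ToyHeaderTable:
    """Toy model of HPACK-style indexing (NOT the real wire format)."""

    def __init__(self) -> None:
        self.entries: List[Header] = []

    def encode(self, headers: List[Header]) -> List[tuple]:
        fields: List[tuple] = []
        for header in headers:
            if header in self.entries:
                # The peer already has this exact name/value pair: send its index.
                fields.append(("idx", self.entries.index(header) + 1))
            else:
                # New pair: send it literally and add it to the table.
                fields.append(("lit",) + header)
                self.entries.append(header)
        return fields

    def decode(self, fields: List[tuple]) -> List[Header]:
        headers: List[Header] = []
        for field in fields:
            if field[0] == "idx":
                headers.append(self.entries[field[1] - 1])
            else:
                header = (field[1], field[2])
                headers.append(header)
                # Mirror the encoder so both tables stay in sync.
                self.entries.append(header)
        return headers


if __name__ == "__main__":
    encoder, decoder = ToyHeaderTable(), ToyHeaderTable()
    first = [(":method", "GET"), (":path", "/lp/index.html"), ("user-agent", "demo")]
    second = [(":method", "GET"), (":path", "/logo.png"), ("user-agent", "demo"),
              ("referer", "https://savvy.co.il/lp/index.html")]
    wire1 = encoder.encode(first)   # everything is sent literally
    wire2 = encoder.encode(second)  # :method and user-agent become indexes 1 and 3
    print(wire2)
    print(decoder.decode(wire1) == first, decoder.decode(wire2) == second)
```

In the second request, only `:path` and `referer` travel as full strings. This mirrors the delta shown above.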

C. Server Push

In simple terms, when a browser requests a page, the server responds with the HTML. The server then waits for the browser to parse that HTML and request the remaining assets (CSS, JS and images) before sending them. Server Push lets the server send the assets it expects the browser to need without waiting for those requests.

Unlike HTTP/2's other features, Server Push requires planning and configuration. If it is implemented poorly, it can actually hurt site performance. Pushing assets the browser already has in its cache is one example.
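Some servers decide which assets to push based on `Link` headers with `rel=preload`. Apache's mod_http2, for example, does this when its `H2Push` directive is enabled. Check your own server's documentation before relying on this. The Python helper below is a hypothetical sketch that builds such a header value for a chosen set of critical assets, and the asset paths are placeholders. Chrome has since removed support for Server Push, but the same header still works as an ordinary preload hint that tells the browser to fetch those assets early.

```python
from typing import Iterable, Tuple


def preload_link_header(assets: Iterable[Tuple[str, str]]) -> str:
    """Build a Link header value announcing critical assets.

    Each asset is (path, as_type), where as_type is a preload destination
    such as "style", "script" or "image".
    """
    return ", ".join(f"<{path}>; rel=preload; as={as_type}" for path, as_type in assets)


if __name__ == "__main__":
    # Hypothetical critical assets for a landing page.
    value = preload_link_header([("/css/main.css", "style"), ("/js/app.js", "script")])
    print("Link: " + value)
```

Keeping the list short and limited to assets needed for the first render reduces the risk of pushing things the browser does not need.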

HTTP/2 Works Only on HTTPS

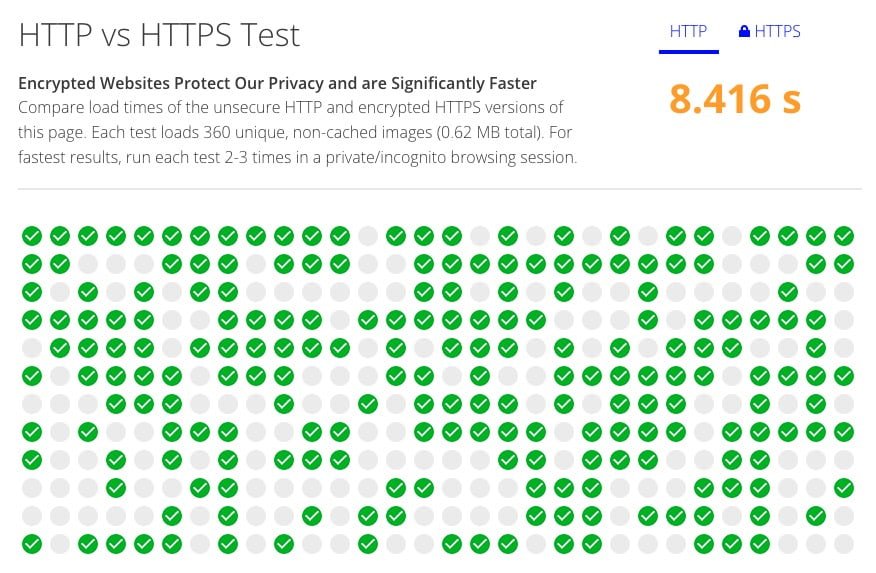

To use HTTP/2, your site must have an SSL/TLS certificate and run on HTTPS. The HTTP/2 specification does not strictly require encryption, but no major browser supports HTTP/2 over an unencrypted connection.
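In practice, browsers agree on HTTP/2 during the TLS handshake through an extension called ALPN (Application-Layer Protocol Negotiation). This is one reason HTTP/2 in the browser goes hand in hand with HTTPS. The Python sketch below uses only the standard `ssl` module. It offers "h2" with an HTTP/1.1 fallback and reports which protocol the server chose. The function names are hypothetical, and the check needs network access.

```python
import socket
import ssl
from typing import Optional


def make_h2_tls_context() -> ssl.SSLContext:
    """Client TLS context that offers HTTP/2 ("h2") first, then HTTP/1.1."""
    ctx = ssl.create_default_context()  # verifies certificates and hostnames
    # ALPN: the application protocol is agreed on inside the TLS handshake.
    ctx.set_alpn_protocols(["h2", "http/1.1"])
    return ctx


def negotiated_protocol(host: str, port: int = 443, timeout: float = 5.0) -> Optional[str]:
    """Return the protocol the server selected via ALPN, or None if it chose none."""
    ctx = make_h2_tls_context()
    with socket.create_connection((host, port), timeout=timeout) as raw_sock:
        with ctx.wrap_socket(raw_sock, server_hostname=host) as tls_sock:
            return tls_sock.selected_alpn_protocol()
```

For example, `negotiated_protocol("savvy.co.il")` should return "h2" if the server supports HTTP/2 over TLS. It returns "http/1.1" if the server only speaks HTTP/1.1, or None if the server does not use ALPN.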

The HTTP vs HTTPS test site offers an interesting comparison between loading a page over HTTP/1.1 and loading it over HTTPS with HTTP/2. In that test, the HTTP/2 version loaded 80% faster than the HTTP/1.1 version.

Moved to HTTP/2? Pay Attention to the Following…

Most optimizations recommended for HTTP/1.1 are still relevant under HTTP/2. However, some of them become unnecessary with HTTP/2, and a few can even slow your site down. Let's take a look at them.

1. Concatenate Files

In HTTP/1.1, loading a single large file was much faster than loading many small ones, so it was common to concatenate JavaScript and CSS files into a single file. With HTTP/2, requests are much cheaper, and merging many files into one is often unnecessary and can even backfire. There are two main reasons:

- In many cases, the merged file contains components that are not required for a specific page. For example, your blog might load components that are only needed for the homepage.

- A small change in any one component invalidates the entire merged file. Returning visitors then cannot use their cached copy and must download the whole file again on their next visit.
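Here is a small Python model that puts the caching argument in numbers. It calculates how many bytes a returning visitor re-downloads after one module changes. The module names follow the CSS example later in this section, but the sizes are invented for illustration. The model also assumes each separate file is cached independently, and the function name is hypothetical.

```python
from typing import Dict


def redownload_bytes(modules: Dict[str, int], changed: str, bundled: bool) -> int:
    """Bytes a returning visitor must fetch again after `changed` is edited.

    `modules` maps each module to its size in bytes. A bundle is one cache
    entry, so any edit invalidates all of it; separate files are cached
    individually, so only the edited file is refetched.
    """
    if changed not in modules:
        raise KeyError(f"unknown module: {changed}")
    return sum(modules.values()) if bundled else modules[changed]


if __name__ == "__main__":
    # Hypothetical sizes in bytes.
    css = {"header.css": 12_000, "sidebar.css": 8_000, "footer.css": 5_000, "blog.css": 20_000}
    print(redownload_bytes(css, "footer.css", bundled=True))   # 45000
    print(redownload_bytes(css, "footer.css", bundled=False))  # 5000
```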

Both issues can make the browser download more data from the server than it actually needs. Still, file concatenation has its place because of compression. Larger files generally compress better, since the compressor can exploit patterns repeated across the combined content, so less data has to be downloaded in total.
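You can observe the compression effect with Python's `zlib` module, which implements DEFLATE, the algorithm behind gzip. The CSS snippets below are made up and the function name is hypothetical. With real files, the size of the gain depends on how much the files have in common.

```python
import zlib
from typing import List, Tuple


def compare_compression(parts: List[bytes]) -> Tuple[int, int]:
    """Return (sum of separately compressed sizes, size of compressed concatenation)."""
    separate = sum(len(zlib.compress(part, 9)) for part in parts)
    # One stream lets DEFLATE back-reference patterns that repeat across files,
    # and pays the stream header/trailer overhead only once.
    combined = len(zlib.compress(b"".join(parts), 9))
    return separate, combined


if __name__ == "__main__":
    header = b".header{display:flex;align-items:center;padding:16px;color:#333;}\n"
    sidebar = b".sidebar{display:flex;flex-direction:column;padding:16px;color:#333;}\n"
    footer = b".footer{display:flex;align-items:center;padding:16px;color:#666;}\n"
    separate, combined = compare_compression([header, sidebar, footer])
    print(f"separate: {separate} bytes, combined: {combined} bytes")
```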

So even though HTTP/2 requests are cheaper, you can still improve performance by consolidating files along logical lines. For example, you might serve:

- styles/main.css

- styles/blog.css

Rather than serving each module separately:

- styles/header.css

- styles/sidebar.css

- styles/footer.css

- styles/blog.css

2. Image Sprites

As with file concatenation, there is no longer any need to combine images into a single sprite and show each one by adjusting the background-position in CSS (a very cumbersome process, I must say).

SVG files are an exception, since a single combined SVG file can achieve better compression results. Each case should be examined individually here.
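For reference, this is the kind of bookkeeping sprites require. The Python helper below computes the CSS background-position for one icon. It assumes a hypothetical sprite sheet of equally sized icons laid out in a grid, left to right and top to bottom, and the function names are illustrative.

```python
def _negative_px(offset: int) -> str:
    # CSS shifts the sprite left/up, so offsets are negative; 0 needs no unit.
    return "0" if offset == 0 else f"-{offset}px"


def sprite_position(index: int, icon_w: int, icon_h: int, columns: int) -> str:
    """background-position value that shows icon number `index` (0-based)."""
    col, row = index % columns, index // columns
    return f"{_negative_px(col * icon_w)} {_negative_px(row * icon_h)}"


if __name__ == "__main__":
    # Hypothetical 4-column sheet of 32x32 icons: icon 5 is in column 1, row 1.
    print(f".icon-5 {{ background-position: {sprite_position(5, 32, 32, 4)}; }}")
```

Every time an icon is added or the sheet is rearranged, these offsets have to be recalculated. Serving separate images avoids that work entirely.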

3. Inlining

Inlining is another way to reduce the number of HTTP requests. It is especially useful for small images. These can be converted to a data URI and embedded directly in the HTML or CSS, so the browser loads them without an additional request to the server.

The problem with this technique is that the browser does not cache inlined assets separately. They are downloaded again every time the page or stylesheet that contains them is downloaded.
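Here is a minimal Python sketch of how a small image becomes a data URI, using the standard `base64` module. It also shows the size cost: Base64 turns every 3 bytes into 4 characters, so an inlined image is about a third larger than the original file. The function names are hypothetical.

```python
import base64


def to_data_uri(data: bytes, mime_type: str) -> str:
    """Encode raw bytes as a data URI usable in <img src> or CSS url()."""
    return f"data:{mime_type};base64,{base64.b64encode(data).decode('ascii')}"


def base64_length(num_bytes: int) -> int:
    """Length of the Base64 text for `num_bytes` bytes (4 chars per 3-byte group, padded)."""
    return 4 * ((num_bytes + 2) // 3)


if __name__ == "__main__":
    print(to_data_uri(b"abc", "text/plain"))  # data:text/plain;base64,YWJj
    print(base64_length(3000))  # 4000 characters for a 3000-byte image
```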

4. Domain Sharding

Domain sharding is a pre-HTTP/2 technique designed to “trick” the browser into loading more assets in parallel. As explained earlier, when all assets come from a single host, the browser downloads only 2-8 files at a time because of its per-host connection limit.

If you spread the assets across three different domains, however, the browser can download 6-24 files in parallel (three times as many). This is why assets are often linked and loaded from several domains, such as:

- cdn1.domain.com

- cdn2.domain.com

- cdn3.domain.com

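Avoid sharding when using HTTP/2. Each extra domain generally needs its own DNS lookup and its own connection, so requests end up split across several connections and multiplexing cannot reach its full potential on a single one.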
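This short Python sketch covers both sides of sharding. The first function reproduces the arithmetic above: under HTTP/1.1, parallel downloads grow with the number of hostnames. The second shows one common way to assign assets to shards. It hashes the path so each asset always comes from the same hostname and stays cacheable. The CRC32 choice and the function names are assumptions, and the hostnames are the examples from the list above.

```python
import zlib
from typing import List


def http1_parallel_limit(hostnames: int, per_host_limit: int) -> int:
    """HTTP/1.1: every hostname gets its own pool of connections."""
    return hostnames * per_host_limit


def shard_for(path: str, shards: List[str]) -> str:
    """Deterministically map an asset path to one shard hostname.

    CRC32 is stable across runs (unlike Python's built-in hash() for str),
    so the same asset keeps the same URL and can be served from cache.
    """
    return shards[zlib.crc32(path.encode("utf-8")) % len(shards)]


if __name__ == "__main__":
    shards = ["cdn1.domain.com", "cdn2.domain.com", "cdn3.domain.com"]
    print(http1_parallel_limit(len(shards), 2), http1_parallel_limit(len(shards), 8))  # 6 24
    print(shard_for("/images/logo.png", shards))
```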

Summary

Thanks to the adoption of HTTP/2, browsing today is faster and safer. Developers and site owners also find it easier to improve both the loading speed and the security of their sites.

There are practically no drawbacks to moving to HTTP/2. All major browsers have supported the protocol for a long time, and clients that don't support it simply fall back to HTTP/1.1.

WordPress sites that serve many assets over HTTP/2 will see a significant improvement in performance. Their owners also no longer need to worry about file concatenation, spreading assets across different domains, or creating image sprites.